Google I/O 2025: The New AI-native Google

Explore how Google I/O 2025 is ushering in the Age of Gemini with AI-driven updates across Google’s ecosystem, and see how FlowHunt brings the latest Gemini 2.5 Flash model to your AI projects.

The Gemini Era

Project Astra & Live API: Making AI Feel Natural

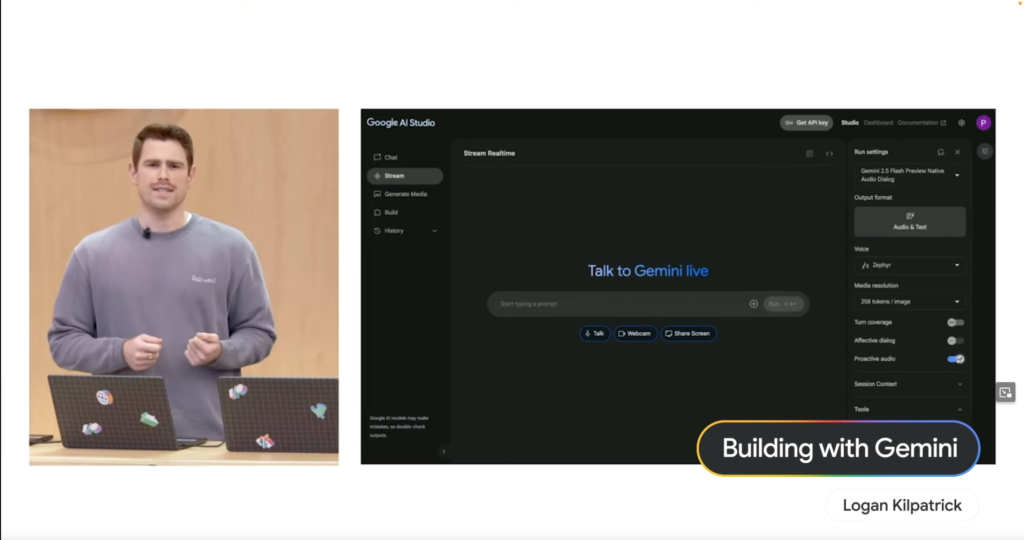

Logan Kilpatrick kicked things off by showcasing how Project Astra aims to make AI interaction feel completely natural. Many of these capabilities are now accessible through the Live API, powered by the new Gemini 2.5 Flash native audio model. This model is more adept at ignoring stray sounds and natively supports 24 languages, paving the way for more intuitive and responsive AI experiences.

Building with Gemini: Real-Time Multimodal Interaction

Paige Bailey demonstrated the power of building with Gemini through a “Keynote Companion” demo. Using Google AI Studio, she showcased how the AI could understand spoken commands, interact with live data (like showing Shoreline Amphitheatre on a map), and even perform complex searches like finding nearby coffee houses with Wi-Fi, all in a conversational flow. This highlights Gemini’s impressive multimodal capabilities.

Android Evolves: Adaptive UIs, XR, and In-IDE AI Assistance

Adaptive by Design: Compose and Android XR

Diana Wong emphasized Google’s commitment to making adaptive UIs easier to build. New features in the Compose Adaptive Layouts library, like Pane Expansion, are designed to help developers create apps that seamlessly adjust to various screen sizes and form factors. This adaptive philosophy extends directly to Android XR, the extended reality platform built in collaboration with Samsung. Developers can start building for upcoming headsets like Project Moohan right now, knowing their adaptively designed apps will be ready for this new immersive frontier.
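The adaptive idea itself fits in a few lines. The sketch below is a language-neutral illustration in Python, not Compose code. The 600 dp and 840 dp breakpoints follow the Material Design window width classes, and the `pane_count` rule is an assumption made for illustration, not something shown in the keynote.

```python
def width_size_class(width_dp: float) -> str:
    """Map a window width in dp to a Material-style width class."""
    if width_dp < 600:
        return "compact"
    if width_dp < 840:
        return "medium"
    return "expanded"


def pane_count(width_dp: float) -> int:
    """Hypothetical layout rule: show two panes only on expanded widths."""
    return 2 if width_size_class(width_dp) == "expanded" else 1


# Illustrative widths: a phone, a small tablet or foldable, a large window.
for width in (411, 700, 1280):
    print(width, width_size_class(width), pane_count(width))
```

An app built this way asks "how much room do I have?" rather than "which device is this?", so the same layout logic carries over to new form factors.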

Smarter Coding: AI Agents in Android Studio

Florina Muntenescu unveiled a game-changer for Android developers: a new AI agent coming soon to Android Studio. This agent is designed to assist with tedious tasks like version updates. In a compelling demo, the AI agent analyzed an old project, identified build failures, and then leveraged Gemini to figure out how to fix the problems, iterating until the build was successful. This promises to significantly streamline the development workflow.
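The build, diagnose, fix, and repeat loop described in the demo can be sketched roughly as follows. This is not how the Android Studio agent is implemented. `fix_until_green`, `fake_build`, and `fake_propose_fix` are hypothetical names, and the "model" here is a stub standing in for a call to Gemini.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple


@dataclass
class BuildResult:
    ok: bool
    error: Optional[str] = None  # first build error message, if any


def fix_until_green(
    build: Callable[[dict], BuildResult],
    propose_fix: Callable[[dict, str], dict],
    project: dict,
    max_attempts: int = 5,
) -> Tuple[dict, int]:
    """Rebuild and patch the project until the build passes or attempts run out.

    Returns the final project state and the number of fixes applied.
    """
    for fixes_applied in range(max_attempts + 1):
        result = build(project)
        if result.ok:
            return project, fixes_applied
        if fixes_applied == max_attempts:
            break
        # In a real agent, this step would ask a model such as Gemini for a patch.
        project = propose_fix(project, result.error or "")
    raise RuntimeError(f"build still failing after {max_attempts} fixes")


# Hypothetical stand-ins so the sketch runs without a build system or a model.
REQUIRED = {"gradle": 8, "kotlin": 2}


def fake_build(project: dict) -> BuildResult:
    """Fail on the first tool whose version is below the required minimum."""
    for tool, minimum in REQUIRED.items():
        if project[tool] < minimum:
            return BuildResult(ok=False, error=f"{tool} too old")
    return BuildResult(ok=True)


def fake_propose_fix(project: dict, error: str) -> dict:
    """Bump the tool named in the error message to its required version."""
    tool = error.split()[0]
    return {**project, tool: REQUIRED[tool]}


fixed, attempts = fix_until_green(
    fake_build, fake_propose_fix, {"gradle": 7, "kotlin": 1}
)
print(fixed, attempts)
```

The attempt cap matters: without it, an agent that keeps proposing ineffective fixes would loop forever instead of handing the problem back to the developer.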

The Web Gets Smarter: On-Device AI and Enhanced UI Building

Streamlined Web UIs: New Capabilities for Complex Elements

Una Kravets highlighted new web capabilities that simplify the creation of common yet surprisingly complex UI elements. Developers will find it easier to build robust and accessible components like carousels and hover cards, enhancing user experiences across the web.

Gemini Nano on the Web: Multimodal AI APIs Unleashed

Addy Osmani announced that Gemini Nano is unlocking new multimodal capabilities directly on the web. New multimodal built-in AI APIs will allow users to interact with Gemini using both audio and image input. A “Cinemal” demo showcased this by allowing a user to take a photo of a ticket with their webcam, and the on-device AI instantly located their seat section in a theater map – a powerful example of on-device processing.

Firebase & AI: Supercharging App Development

David East demonstrated how Firebase is integrating AI to accelerate app development. Developers can now bring their Figma designs to life in Firebase Studio with help from Builder.io. The impressive part? This isn’t just a monolithic code dump; the Figma export generates well-isolated, individual React components. Using Gemini within Firebase Studio, David showed how he could then easily ask the AI to add features like an “Add to Cart” button to a product detail page, which the AI promptly implemented, updating the code and the live web preview.

Democratizing AI: New Open Models – Gemma 3n & SignGemma

Gemma 3n: Powerful AI on Just 2GB RAM

Gus Martins delivered exciting news for the open-source community with the announcement of Gemma 3n. This highly efficient model can run on as little as 2GB of RAM and is much faster and leaner on mobile hardware than Gemma 3. Crucially, Gemma 3n also adds audio understanding, making it truly multimodal.
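To get a feel for why a 2GB budget is tight, here is a back-of-the-envelope estimate of the memory needed just to store model weights. The parameter count is a hypothetical round number, not an official Gemma 3n figure. The estimate also ignores activations, caches, and runtime overhead, as well as any model-specific memory optimizations.

```python
def weights_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory for the weights alone, in decimal gigabytes."""
    bytes_total = num_params * bits_per_param / 8
    return bytes_total / 1e9


HYPOTHETICAL_PARAMS = 2e9  # illustrative size only, not an official figure

for bits in (16, 8, 4):
    size = weights_memory_gb(HYPOTHETICAL_PARAMS, bits)
    print(f"{bits}-bit weights: {size:.2f} GB")
```

Under these assumptions, 16-bit weights alone would need about 4 GB, while 8-bit and 4-bit weights come to about 2 GB and 1 GB. That is why parameter count and numeric precision largely determine whether a model fits on a phone.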

SignGemma: Bridging Communication Gaps

Google is also pushing the boundaries of accessibility with SignGemma. This new family of models is trained to translate sign language (with a current focus on American Sign Language – ASL) into spoken language text, opening up new avenues for communication and inclusivity.

New Gemini 2.5 Flash in FlowHunt

Inspired by the incredible AI advancements at Google I/O? Want to start creating sophisticated AI Agents that can understand, reason, and act?

FlowHunt is the AI-powered platform that helps you create powerful AI Agents seamlessly, without needing to be a machine learning expert. Design complex workflows, integrate various tools, and deploy intelligent agents with ease.

And the great news? FlowHunt now supports Gemini 2.5 Flash, one of the models highlighted in the keynote! This means you can immediately start leveraging its speed, efficiency, and enhanced audio capabilities to build even more powerful and responsive AI Agents.

Try Gemini 2.5 Flash in FlowHunt

Try the new models right away: they were implemented in FlowHunt in less than 24 hours!

Frequently asked questions

- What is the main focus of Google I/O 2025?

Google I/O 2025 highlights the company's shift to an AI-native future, with Gemini models at the core of new products and features across Android, the web, and developer tools.

- What is Gemini 2.5 Flash?

Gemini 2.5 Flash is a fast, efficient Gemini model from Google. Its new native audio variant, which powers the Live API, is better at ignoring stray sounds and natively supports 24 languages. Gemini 2.5 Flash is available to developers and integrated into platforms like FlowHunt.

- What are Gemma 3n and SignGemma?

Gemma 3n is an efficient open model that runs on as little as 2GB of RAM and adds audio understanding, making it multimodal. SignGemma is a family of models that translates sign language, currently focusing on ASL, into spoken language text.

- How is FlowHunt leveraging the new Gemini models?

FlowHunt now supports Gemini 2.5 Flash, allowing users to create advanced AI Agents with improved audio and multimodal capabilities, without requiring machine learning expertise.

- What are the new AI features in Android and web development?

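On Android, Google showed new Compose Adaptive Layouts features such as Pane Expansion, support for building on Android XR, and an upcoming AI agent in Android Studio that helps with tasks like version updates. On the web, new capabilities simplify building components like carousels and hover cards, and Gemini Nano enables built-in multimodal AI APIs that accept audio and image input directly in the browser.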

Yasha is a talented software developer specializing in Python, Java, and machine learning. Yasha writes technical articles on AI, prompt engineering, and chatbot development.

Experience the power of the new Gemini 2.5 Flash model in FlowHunt and build next-generation AI Agents with advanced multimodal capabilities.