The 12-Factor AI Agent: Building Effective AI Systems That Scale

Discover the 12 factors for building robust, scalable AI agents: from natural language conversion and prompt ownership, to human collaboration and stateless design. Build production-ready AI systems that deliver real business value.

What Makes an Effective AI Agent?

Before diving into the factors, let’s clarify what we mean by “AI agents.” At their core, these are systems that can interpret natural language requests, make decisions based on context, and execute specific actions through tools or APIs—all while maintaining coherent, ongoing interactions.

The most powerful agents combine the reasoning capabilities of language models with the reliability of deterministic code. But striking this balance requires careful design choices, which is exactly what these factors address.

The 12 Factors for Building Robust AI Agents

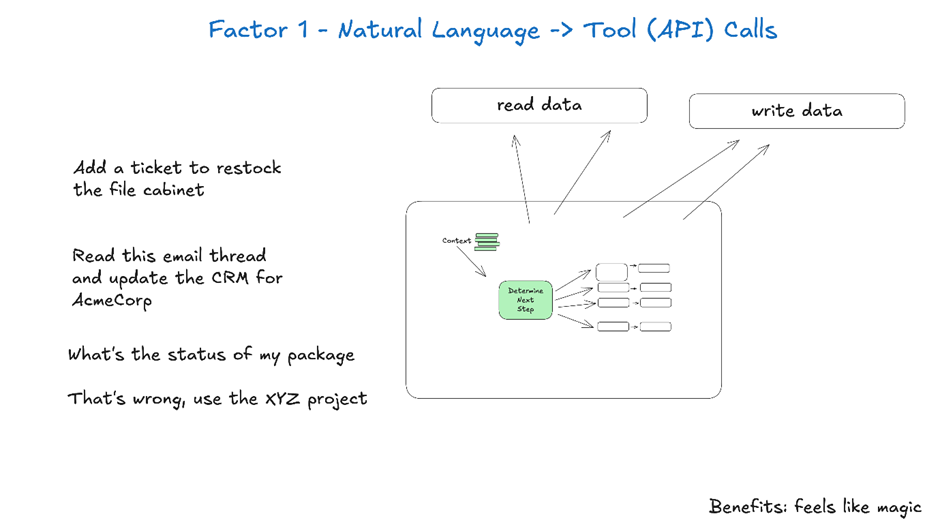

1. Master Natural Language to Tool Call Conversion

The ability to transform natural language requests into structured tool calls sits at the heart of agent functionality. This is what enables an agent to take a simple command like “create a payment link for $750 to Terri for the February AI Tinkerers meetup” and convert it into a properly formatted API call.

    {
      "function": {
        "name": "create_payment_link",
        "parameters": {
          "amount": 750,
          "customer": "cust_128934ddasf9",
          "product": "prod_8675309",
          "price": "prc_09874329fds",
          "quantity": 1,
          "memo": "Hey Jeff - see below for the payment link for the February AI Tinkerers meetup"
        }
      }
    }

The key to making this work reliably is using deterministic code to handle the structured output from your language model. Always validate API payloads before execution to prevent errors, and ensure your LLM returns consistent JSON formats that can be reliably parsed.
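As a rough illustration of validating a payload before execution, the sketch below parses the model's raw output and checks each field of the payment-link call. The `validateToolCall` function, the `ValidationResult` type, and the specific rules (positive amount, integer quantity, non-empty strings) are assumptions made for this example, not part of any particular SDK.

```typescript
// Hypothetical shape of the tool call; field names mirror the JSON example in this section.
interface CreatePaymentLinkCall {
  name: "create_payment_link";
  parameters: {
    amount: number;
    customer: string;
    product: string;
    price: string;
    quantity: number;
    memo: string;
  };
}

type ValidationResult =
  | { ok: true; call: CreatePaymentLinkCall }
  | { ok: false; errors: string[] };

function isRecord(value: unknown): value is Record<string, unknown> {
  return typeof value === "object" && value !== null && !Array.isArray(value);
}

// Parse raw model output and check every field before any API request is made.
function validateToolCall(raw: string): ValidationResult {
  let parsed: unknown;
  try {
    parsed = JSON.parse(raw);
  } catch {
    return { ok: false, errors: ["output is not valid JSON"] };
  }

  const fn = isRecord(parsed) ? parsed["function"] : undefined;
  if (!isRecord(fn) || fn["name"] !== "create_payment_link") {
    return { ok: false, errors: ["expected a create_payment_link function call"] };
  }
  const params = fn["parameters"];
  if (!isRecord(params)) {
    return { ok: false, errors: ["parameters must be an object"] };
  }

  // Collect every problem so the caller can report them all at once.
  const errors: string[] = [];
  const amount = params["amount"];
  if (typeof amount !== "number" || !isFinite(amount) || amount <= 0) {
    errors.push("amount must be a positive number");
  }
  const quantity = params["quantity"];
  if (typeof quantity !== "number" || quantity % 1 !== 0 || quantity < 1) {
    errors.push("quantity must be a positive integer");
  }
  for (const field of ["customer", "product", "price", "memo"]) {
    const value = params[field];
    if (typeof value !== "string" || value.length === 0) {
      errors.push(`${field} must be a non-empty string`);
    }
  }
  if (errors.length > 0) {
    return { ok: false, errors };
  }

  return {
    ok: true,
    call: {
      name: "create_payment_link",
      // Safe after the checks above: every field has been verified.
      parameters: params as unknown as CreatePaymentLinkCall["parameters"],
    },
  };
}
```

Only a result with `ok: true` would be passed on to the payment API; a failed result can be logged or fed back to the model as an error.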

2. Own Your Prompts Completely

Your prompts are the interface between your application and the language model, so treat them as first-class code. Frameworks that abstract prompts away might seem convenient, but they often obscure exactly which instructions reach the LLM, which makes prompts hard to debug and tune.

Instead, maintain direct control over your prompts by writing them explicitly:

    function DetermineNextStep(thread: string) -> DoneForNow | ListGitTags | DeployBackend | DeployFrontend | RequestMoreInformation {
      prompt #"
        {{ _.role("system") }}

        You are a helpful assistant that manages deployments for frontend and backend systems.

        ...

        {{ _.role("user") }}

        {{ thread }}

        What should the next step be?
      "#
    }

This approach gives you several advantages:

- Full control to write precise instructions tailored to your specific use case

- Ability to build evaluations and tests for prompts like any other code

- Transparency to understand exactly what the LLM receives

- Freedom to iterate based on performance metrics
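To make the "test prompts like any other code" point concrete, here is a minimal TypeScript sketch of an explicitly owned prompt. The `ChatMessage` type and the `buildNextStepPrompt` function are hypothetical names for this example; the system prompt contains only the sentence shown in this section, since the rest is elided there.

```typescript
// Hypothetical message shape; many chat-style LLM APIs accept something similar.
interface ChatMessage {
  role: "system" | "user";
  content: string;
}

// Only the sentence quoted in this article; the remainder of the prompt is not shown there.
const SYSTEM_PROMPT =
  "You are a helpful assistant that manages deployments for frontend and backend systems.";

// The prompt is ordinary code: every token sent to the model is visible and reviewable.
function buildNextStepPrompt(thread: string): ChatMessage[] {
  return [
    { role: "system", content: SYSTEM_PROMPT },
    { role: "user", content: `${thread}\n\nWhat should the next step be?` },
  ];
}
```

Because the prompt is a plain function, a unit test can assert on exactly what the model will receive before any model call is made.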

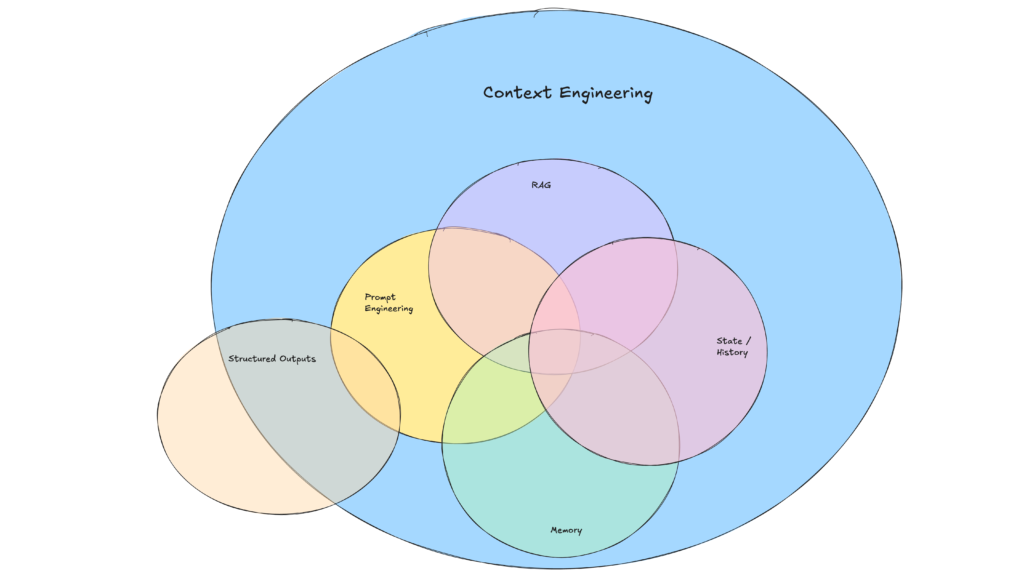

3. Design Your Context Window Strategically

The context window is everything the LLM sees on a given call: prompts, conversation history, and external data. What you put in it, and in what format, directly affects both output quality and token cost.

Move beyond standard message-based formats to custom structures that maximize information density:

    <slack_message>
      From: @alex
      Channel: #deployments
      Text: Can you deploy the backend?
    </slack_message>

    <list_git_tags>
      intent: "list_git_tags"
    </list_git_tags>

    <list_git_tags_result>
      tags:
        - name: "v1.2.3"
          commit: "abc123"
          date: "2024-03-15T10:00:00Z"
    </list_git_tags_result>

This approach offers several benefits:

- Reduced token usage with compact formats

- Better filtering of sensitive data before passing to the LLM

- Flexibility to experiment with formats that improve LLM understanding
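One way to produce a compact, tagged context like the one above is a small serializer over a list of events. In this sketch, the `ThreadEvent` shape, the `threadToPrompt` function, and the `secretKeys` parameter are assumptions for illustration; the filtering step simply drops named fields before they reach the model.

```typescript
// Hypothetical event shape: `type` becomes the tag name, `data` becomes the body.
interface ThreadEvent {
  type: string;
  data: Record<string, string> | string;
}

// Render one event as a tagged block, skipping any keys listed in secretKeys.
function eventToPrompt(event: ThreadEvent, secretKeys: string[]): string {
  const data = event.data;
  let body: string;
  if (typeof data === "string") {
    body = data;
  } else {
    const lines: string[] = [];
    for (const key of Object.keys(data)) {
      if (secretKeys.indexOf(key) !== -1) {
        continue; // never send sensitive fields to the model
      }
      lines.push(`${key}: ${data[key]}`);
    }
    body = lines.join("\n");
  }
  return `<${event.type}>\n${body}\n</${event.type}>`;
}

// Serialize the whole thread into a single string for the context window.
function threadToPrompt(events: ThreadEvent[], secretKeys: string[] = []): string {
  return events.map((event) => eventToPrompt(event, secretKeys)).join("\n\n");
}
```

Keeping serialization in one function makes it easy to experiment with different formats and measure their effect on token usage and model behavior.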

4. Implement Tools as Structured Outputs

At their core, tools are simply JSON outputs from the LLM that trigger deterministic actions in your code. This creates a clean separation between AI decision-making and execution logic.

Define tool schemas clearly:

    class CreateIssue {
      intent: "create_issue";
      issue: {
        title: string;
        description: string;
        team_id: string;
        assignee_id: string;
      };
    }

    class SearchIssues {
      intent: "search_issues";
      query: string;
      what_youre_looking_for: string;
    }

Then build reliable parsing for LLM JSON outputs, use deterministic code to execute the actions, and feed results back into the context for iterative workflows.
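The parse-then-dispatch step can be sketched as follows. The `ToolCall` union mirrors the two schemas above; `parseToolCall`, `requireString`, and `describeAction` are hypothetical helpers, and `describeAction` stands in for real execution logic so the example stays self-contained.

```typescript
// Discriminated union mirroring the CreateIssue and SearchIssues schemas.
type ToolCall =
  | {
      intent: "create_issue";
      issue: { title: string; description: string; team_id: string; assignee_id: string };
    }
  | { intent: "search_issues"; query: string; what_youre_looking_for: string };

function requireString(source: Record<string, unknown>, key: string): string {
  const value = source[key];
  if (typeof value !== "string") {
    throw new Error(`field "${key}" must be a string`);
  }
  return value;
}

// Turn raw model output into a typed tool call, rejecting anything unexpected.
function parseToolCall(raw: string): ToolCall {
  const value: unknown = JSON.parse(raw);
  if (typeof value !== "object" || value === null) {
    throw new Error("tool call must be a JSON object");
  }
  const fields = value as Record<string, unknown>;
  switch (fields["intent"]) {
    case "create_issue": {
      const issue = fields["issue"];
      if (typeof issue !== "object" || issue === null) {
        throw new Error('field "issue" must be an object');
      }
      const issueFields = issue as Record<string, unknown>;
      return {
        intent: "create_issue",
        issue: {
          title: requireString(issueFields, "title"),
          description: requireString(issueFields, "description"),
          team_id: requireString(issueFields, "team_id"),
          assignee_id: requireString(issueFields, "assignee_id"),
        },
      };
    }
    case "search_issues":
      return {
        intent: "search_issues",
        query: requireString(fields, "query"),
        what_youre_looking_for: requireString(fields, "what_youre_looking_for"),
      };
    default:
      throw new Error(`unknown intent: ${String(fields["intent"])}`);
  }
}

// Deterministic dispatch: the model only picks the intent; this code decides what runs.
function describeAction(call: ToolCall): string {
  switch (call.intent) {
    case "create_issue":
      return `create issue "${call.issue.title}" for team ${call.issue.team_id}`;
    case "search_issues":
      return `search issues matching "${call.query}"`;
  }
}
```

In a real agent, each `case` in the dispatcher would call your issue tracker's client and append the result to the thread.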

5. Unify Execution and Business State

Many agent frameworks separate execution state (e.g., current step in a process) from business state (e.g., history of tool calls and their results). This separation adds unnecessary complexity.

Instead, keep all state in a single thread of events (the same thread that is serialized into the context window) and infer execution state from the sequence of events:

    <deploy_backend>
      intent: "deploy_backend"
      tag: "v1.2.3"
      environment: "production"
    </deploy_backend>

    <error>
      error running deploy_backend: Failed to connect to deployment service
    </error>

This unified approach provides:

- Simplicity with one source of truth for state

- Better debugging with the full history in one place

- Easy recovery, since loading the thread lets you resume from any point
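Inferring execution state from the event sequence might look like the sketch below. The `ExecutionState` labels and the event type names `done_for_now` and `request_human_input` are assumptions chosen to echo intents used elsewhere in this article; a real system would define its own.

```typescript
interface ThreadEvent {
  type: string;
  data: unknown;
}

// Hypothetical set of execution states derived purely from the thread.
type ExecutionState = "awaiting_model" | "awaiting_human" | "failed_last_step" | "done";

// No separate "current step" field is stored: the last event says where we are.
function inferExecutionState(events: ThreadEvent[]): ExecutionState {
  const last = events[events.length - 1];
  if (last === undefined) {
    return "awaiting_model"; // empty thread: nothing has happened yet
  }
  switch (last.type) {
    case "done_for_now":
      return "done";
    case "request_human_input":
      return "awaiting_human";
    case "error":
      return "failed_last_step";
    default:
      // A message or tool result was appended; the model should decide what comes next.
      return "awaiting_model";
  }
}
```

For the deployment thread shown above, the last event is an error, so the inferred state would be `failed_last_step`.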

Taking AI Agents to Production

6. Design APIs for Launch, Pause, and Resume

Production-grade agents need to integrate seamlessly with external systems, pausing for long-running tasks and resuming when triggered by webhooks or other events.

Implement APIs that allow launching, pausing, and resuming agents, with robust state storage between operations. This enables:

- Flexible support for asynchronous workflows

- Clean integration with webhooks and other systems

- Reliable resumption after interruptions without restarting
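A minimal sketch of a launch, pause, and resume interface is shown below. The `AgentRuntime` class and its method names are hypothetical, and the in-memory `store` of serialized threads is a stand-in for a real database; the point is that every operation loads and saves the full thread, so a resume can happen in a different process from the launch.

```typescript
interface ThreadEvent {
  type: string;
  data: unknown;
}

interface Thread {
  id: string;
  status: "running" | "paused";
  events: ThreadEvent[];
}

class AgentRuntime {
  // Stand-in for a database table keyed by thread id; values are serialized threads.
  private store: Record<string, string> = {};
  private nextId = 1;

  // Start a new thread from a triggering event and persist it immediately.
  launch(initialEvent: ThreadEvent): string {
    const thread: Thread = {
      id: `thread_${this.nextId++}`,
      status: "running",
      events: [initialEvent],
    };
    this.save(thread);
    return thread.id;
  }

  // Mark the thread as waiting on an external system or a human.
  pause(threadId: string): void {
    const thread = this.load(threadId);
    thread.status = "paused";
    this.save(thread);
  }

  // Called by a webhook or similar: append the incoming event and continue.
  resume(threadId: string, event: ThreadEvent): Thread {
    const thread = this.load(threadId);
    if (thread.status !== "paused") {
      throw new Error(`thread ${threadId} is not paused`);
    }
    thread.events.push(event);
    thread.status = "running";
    this.save(thread);
    return thread;
  }

  load(threadId: string): Thread {
    const raw = this.store[threadId];
    if (raw === undefined) {
      throw new Error(`unknown thread: ${threadId}`);
    }
    return JSON.parse(raw) as Thread;
  }

  private save(thread: Thread): void {
    this.store[thread.id] = JSON.stringify(thread);
  }
}
```

In production these methods would typically sit behind HTTP endpoints, with the webhook handler calling `resume` using a thread id carried in the callback payload.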

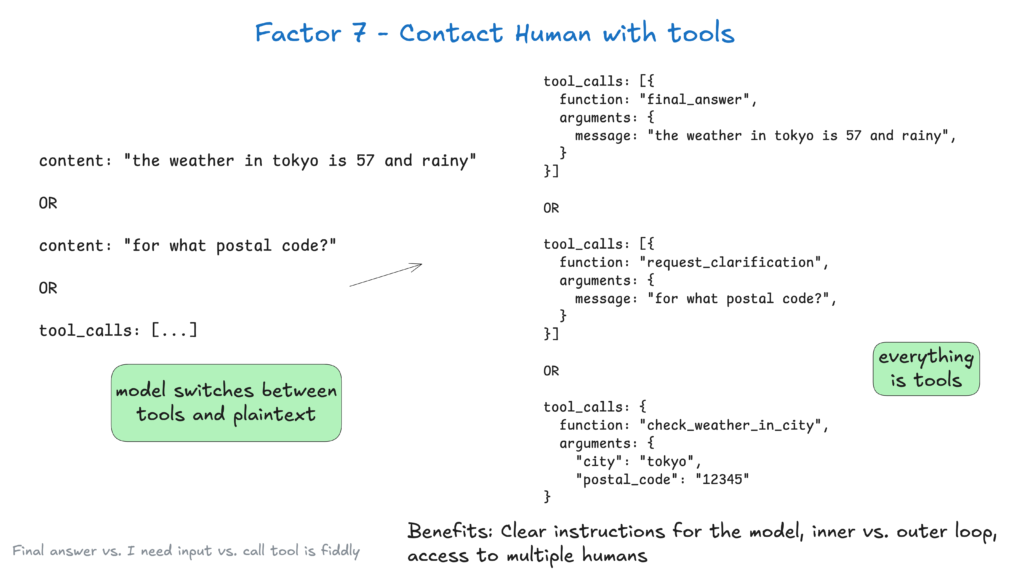

7. Enable Human Collaboration Through Tool Calls

AI agents frequently need human input for high-stakes decisions or ambiguous situations. Using structured tool calls makes this interaction seamless:

    class RequestHumanInput {
      intent: "request_human_input";
      question: string;
      context: string;
      options: {
        urgency: "low" | "medium" | "high";
        format: "free_text" | "yes_no" | "multiple_choice";
        choices: string[];
      };
    }

This approach provides clear specification of interaction type and urgency, supports input from multiple users, and combines well with APIs for durable workflows.
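Because the request declares its expected format, the human's reply can be checked deterministically before it is appended to the thread. The sketch below assumes a hypothetical `normalizeReply` helper; the `RequestHumanInput` interface mirrors the schema above.

```typescript
interface RequestHumanInput {
  intent: "request_human_input";
  question: string;
  context: string;
  options: {
    urgency: "low" | "medium" | "high";
    format: "free_text" | "yes_no" | "multiple_choice";
    choices: string[];
  };
}

// Returns the normalized answer, or null when the reply does not fit the requested format.
function normalizeReply(request: RequestHumanInput, reply: string): string | null {
  const text = reply.trim();
  switch (request.options.format) {
    case "free_text":
      return text.length > 0 ? text : null;
    case "yes_no": {
      const lower = text.toLowerCase();
      return lower === "yes" || lower === "no" ? lower : null;
    }
    case "multiple_choice": {
      // Match case-insensitively but return the choice exactly as it was offered.
      const lower = text.toLowerCase();
      for (const choice of request.options.choices) {
        if (choice.toLowerCase() === lower) {
          return choice;
        }
      }
      return null;
    }
  }
}
```

A `null` result is a signal to ask the human again rather than pass an unusable answer back to the model.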

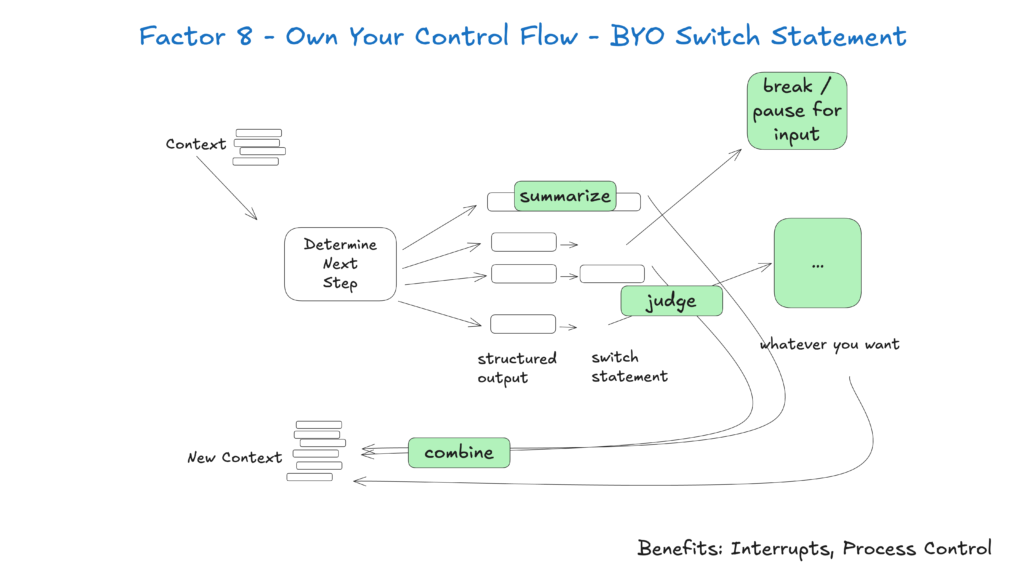

8. Control Your Agent’s Flow

Custom control flow allows you to pause for human approval, cache results, or implement rate limiting—tailoring the agent’s behavior to your specific needs:

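    // Assumes determineNextStep, threadToPrompt, sendMessageToHuman, db, and linearClient are defined elsewhere.
    async function handleNextStep(thread: Thread): Promise<void> {
      while (true) {
        const nextStep = await determineNextStep(threadToPrompt(thread));
        // Record the model's decision so the thread stays the single source of truth.
        thread.events.push({ type: nextStep.intent, data: nextStep });

        if (nextStep.intent === 'request_clarification') {
          // Pause: message the human, persist the thread, and exit until a reply arrives.
          await sendMessageToHuman(nextStep);
          await db.saveThread(thread);
          break;
        } else if (nextStep.intent === 'fetch_open_issues') {
          // Safe to run immediately: append the result and ask the model again.
          const issues = await linearClient.issues();
          thread.events.push({ type: 'fetch_open_issues_result', data: issues });
          continue;
        } else {
          // Any other intent ends this run; without this branch the loop would never exit.
          await db.saveThread(thread);
          break;
        }
      }
    }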

With this approach, you gain:

- Interruptibility to pause for human review before critical actions

- Customization options for logging, caching, or summarization

- Reliable handling of long-running tasks

9. Compact Errors into Context for Self-Healing

Including errors directly in the context window lets the agent see what failed on its next model call and adjust its approach:

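    // executeStep is a hypothetical helper that runs a single tool call and returns its result.
    try {
      const result = await executeStep(thread, nextStep);
      thread.events.push({ type: `${nextStep.intent}_result`, data: result });
    } catch (e) {
      // Keep the failure in the thread so the next model call can see it.
      thread.events.push({ type: 'error', data: formatError(e) });
    }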

For this to work effectively:

- Limit retries to prevent infinite loops

- Escalate to humans after repeated failures

- Format errors clearly so the LLM can understand what went wrong
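The three guidelines above can be sketched with a counter over trailing error events. The threshold of three, the `nextActionAfterError` function, and the 500-character truncation in this version of `formatError` are all assumptions for illustration; tune them to your own workload.

```typescript
interface ThreadEvent {
  type: string;
  data: unknown;
}

// Reduce an arbitrary thrown value to a short, readable message for the model.
function formatError(e: unknown, maxLength: number = 500): string {
  const message = e instanceof Error ? e.message : String(e);
  return message.length > maxLength ? message.slice(0, maxLength) + "..." : message;
}

// Count error events at the end of the thread; any other event resets the streak.
function consecutiveErrors(events: ThreadEvent[]): number {
  let count = 0;
  for (let i = events.length - 1; i >= 0 && events[i].type === "error"; i--) {
    count++;
  }
  return count;
}

// Let the model retry a few times, then hand off to a human instead of looping forever.
function nextActionAfterError(
  events: ThreadEvent[],
  maxConsecutiveErrors: number = 3
): "retry" | "escalate_to_human" {
  return consecutiveErrors(events) >= maxConsecutiveErrors ? "escalate_to_human" : "retry";
}
```

Counting only consecutive errors means a successful step in between gives the agent a fresh retry budget.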

Architectural Best Practices

10. Build Small, Focused Agents

Small agents that handle 3–20 steps maintain manageable context windows, improving LLM performance and reliability. This approach provides:

- Clarity with well-defined scope for each agent

- Reduced risk of the agent losing focus

- Easier testing and validation of specific functions

As LLMs continue to improve, these small agents can expand their scope while maintaining quality, ensuring long-term scalability.

11. Enable Triggers from Multiple Sources

Make your agents accessible by allowing triggers from Slack, email, or event systems—meeting users where they already work.

Implement APIs that launch agents from various channels and respond via the same medium. This enables:

- Better accessibility by integrating with user-preferred platforms

- Support for event-driven automation workflows

- Human approval workflows for high-stakes operations
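One way to support several channels without duplicating agent logic is to normalize every trigger into the same launch request. In the sketch below, the `Trigger` union, the `LaunchRequest` shape, and the `replyTo` string format are hypothetical; they only illustrate mapping each source to a first thread event plus a reply destination.

```typescript
// Hypothetical trigger payloads, one variant per channel.
type Trigger =
  | { source: "slack"; channel: string; user: string; text: string }
  | { source: "email"; from: string; subject: string; body: string }
  | { source: "webhook"; eventName: string; payload: string };

interface LaunchRequest {
  // Where the agent should send its response, so replies use the originating channel.
  replyTo: string;
  // First event of the new thread, in the same shape as every later event.
  event: { type: string; data: string };
}

// Convert any supported trigger into the single format the agent understands.
function toLaunchRequest(trigger: Trigger): LaunchRequest {
  switch (trigger.source) {
    case "slack":
      return {
        replyTo: `slack:${trigger.channel}`,
        event: { type: "slack_message", data: `From: ${trigger.user}\nText: ${trigger.text}` },
      };
    case "email":
      return {
        replyTo: `email:${trigger.from}`,
        event: {
          type: "email_message",
          data: `From: ${trigger.from}\nSubject: ${trigger.subject}\nText: ${trigger.body}`,
        },
      };
    case "webhook":
      return {
        replyTo: `webhook:${trigger.eventName}`,
        event: { type: trigger.eventName, data: trigger.payload },
      };
  }
}
```

Adding a new channel then means adding one variant and one `case`, while the agent loop itself stays unchanged.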

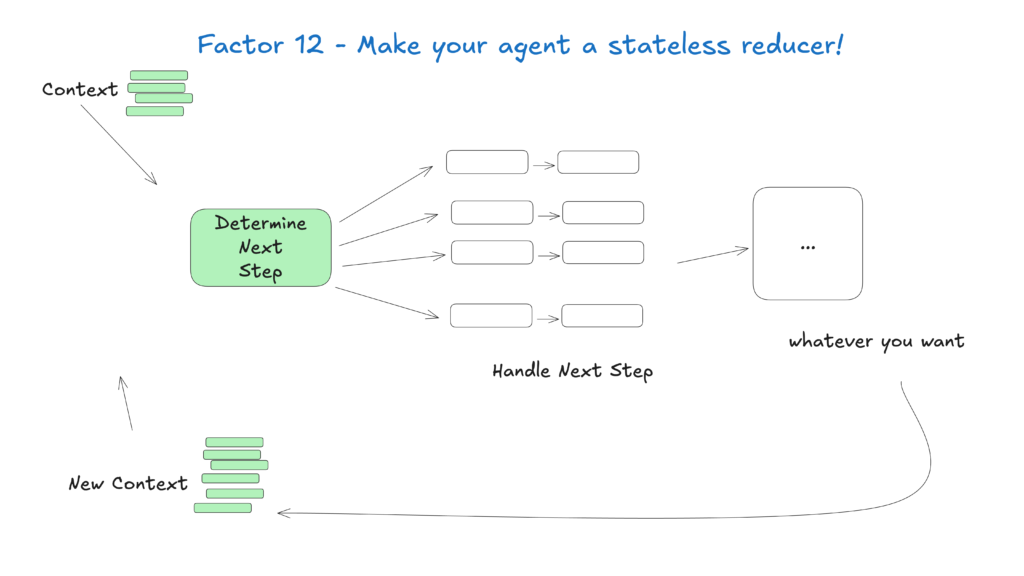

12. Design Agents as Stateless Reducers

Treating agents as stateless functions that transform input context into output actions simplifies state management, making them predictable and easier to debug.

This conceptual approach views agents as pure functions that maintain no internal state, providing:

- Predictable behavior for given inputs

- Easier tracing of issues through the context history

- Simpler testing and validation
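The reducer idea can be sketched in a few lines. Here `agentStep` and `runAgent` are hypothetical names, and `stubDecide` is a deterministic stand-in for the model call so the example is reproducible; with a real LLM, determinism also depends on the model and its sampling settings.

```typescript
interface ThreadEvent {
  type: string;
  data: string;
}

// Stand-in for the model call: given the thread so far, return the next event.
type DecideNextStep = (thread: ReadonlyArray<ThreadEvent>) => ThreadEvent;

// One reducer step: thread in, new thread out. The input array is never mutated.
function agentStep(thread: ReadonlyArray<ThreadEvent>, decide: DecideNextStep): ThreadEvent[] {
  return thread.concat([decide(thread)]);
}

// Apply the reducer until the agent reports it is done or the step budget runs out.
function runAgent(
  initial: ReadonlyArray<ThreadEvent>,
  decide: DecideNextStep,
  maxSteps: number
): ThreadEvent[] {
  let thread: ThreadEvent[] = initial.slice();
  for (let step = 0; step < maxSteps; step++) {
    thread = agentStep(thread, decide);
    if (thread[thread.length - 1].type === "done_for_now") {
      break;
    }
  }
  return thread;
}

// Deterministic stub used in place of a real LLM.
const stubDecide: DecideNextStep = (thread) =>
  thread.length === 1
    ? { type: "list_git_tags", data: "" }
    : { type: "done_for_now", data: "tags listed" };
```

Since all state lives in the thread passed in, any step can be replayed in a test by calling `agentStep` with a saved thread.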

Building for the Future

The field of AI agents is evolving rapidly, but these core principles will remain relevant even as the underlying models improve. By starting with small, focused agents that follow these practices, you can create systems that deliver value today while adapting to future advancements.

Remember that the most effective AI agents combine the reasoning capabilities of language models with the reliability of deterministic code—and these 12 factors help you strike that balance.

How FlowHunt Applied the 12-Factor Methodology

At FlowHunt, we’ve put these principles into practice by developing our own AI agent that automatically creates workflow automations for our customers. Here’s how we applied the 12-factor methodology to build a reliable, production-ready system.

Frequently Asked Questions

- What is the 12-Factor AI Agent methodology?

The 12-Factor AI Agent methodology is a set of best practices inspired by the 12-factor app model, designed to help developers build robust, maintainable, and scalable AI agents that perform reliably in real-world production environments.

- Why is context management important for AI agents?

Context management ensures that AI agents maintain relevant conversation history, prompts, and state, optimizing performance, reducing token usage, and improving decision-making accuracy.

- How do FlowHunt AI agents enable human collaboration?

FlowHunt AI agents structure tool calls to request human input when needed, allowing seamless collaboration, approvals, and durable workflows for complex or high-stakes scenarios.

- What are the benefits of designing stateless AI agents?

Stateless AI agents are predictable, easier to debug, and simpler to scale because they transform input context into output actions without maintaining hidden internal state.

Arshia is an AI Workflow Engineer at FlowHunt. With a background in computer science and a passion for AI, she specializes in creating efficient workflows that integrate AI tools into everyday tasks, enhancing productivity and creativity.
