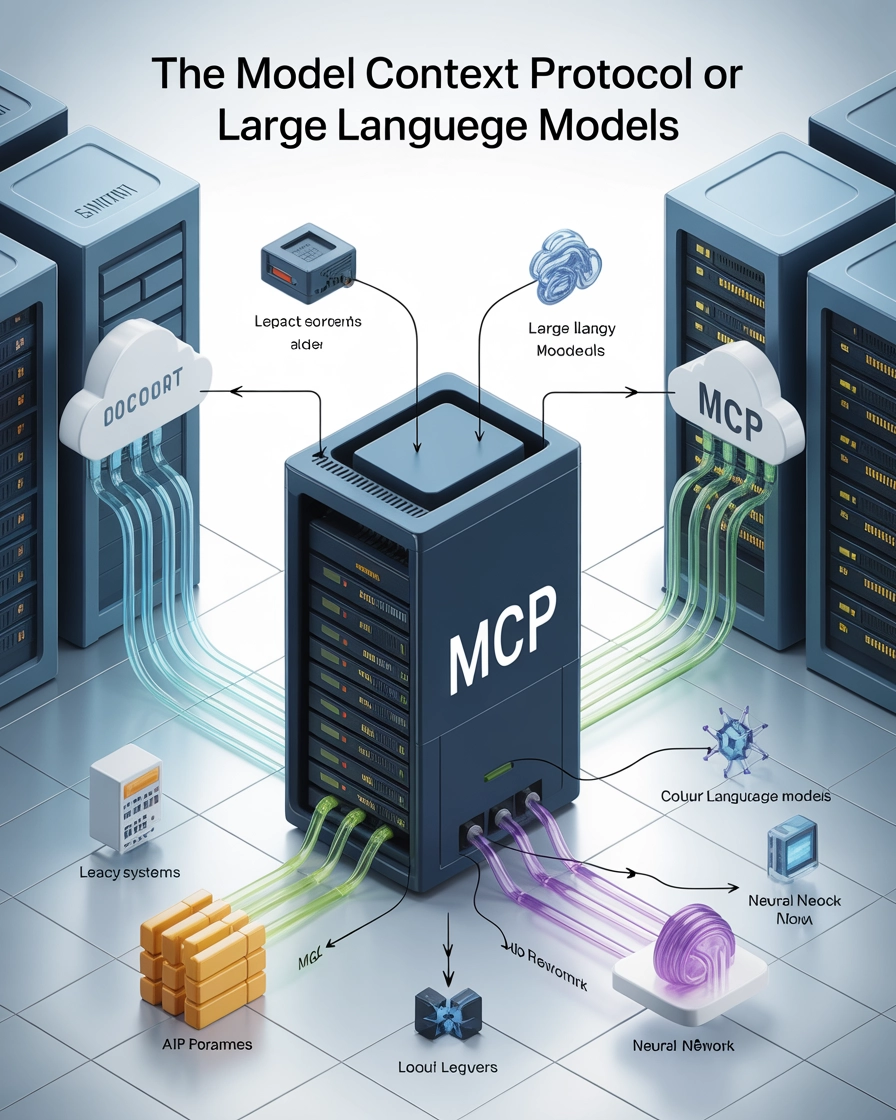

MCP: Model Context Protocol

MCP standardizes secure LLM access to external data, tools, and plugins, enabling flexible, powerful AI integration and interoperability.

Definition

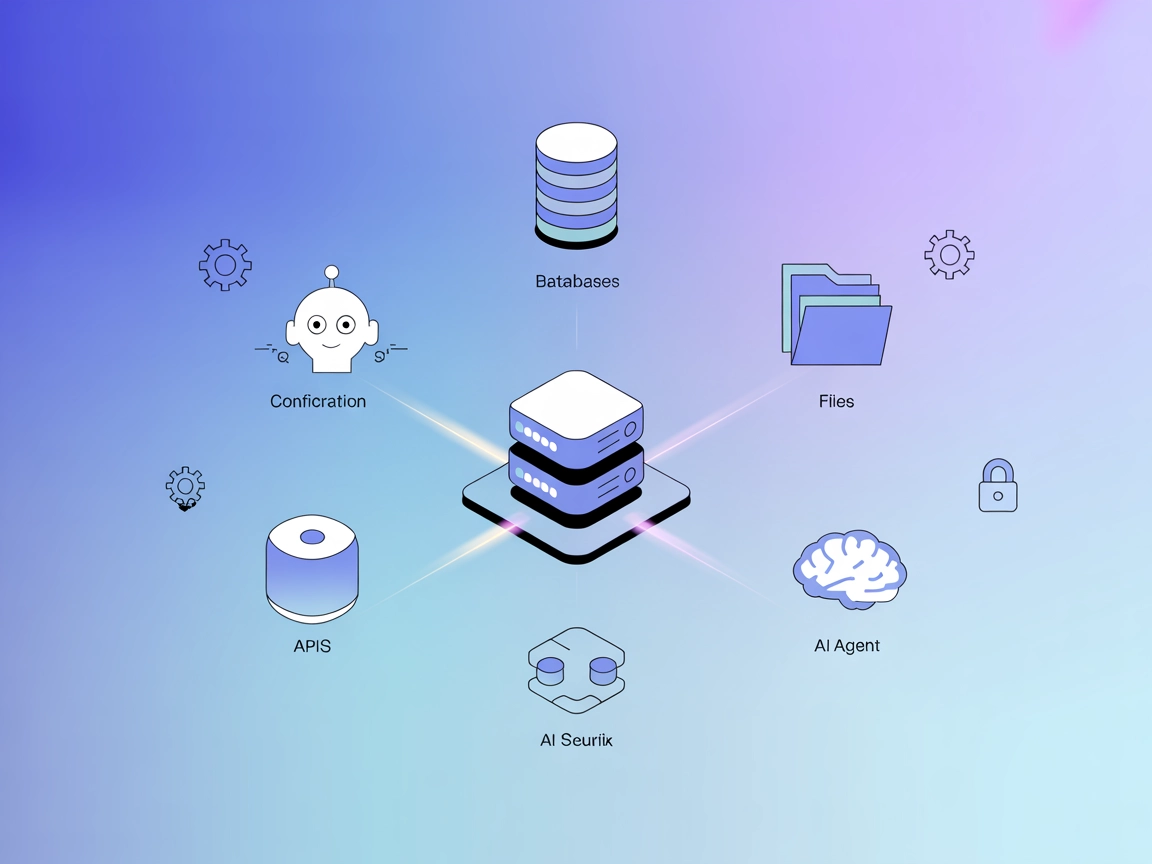

The Model Context Protocol (MCP) is an open standard interface that enables Large Language Models (LLMs) to securely and consistently access external data sources, tools, and capabilities. It establishes a standardized communication layer between AI applications and context providers, and is often described as the “USB-C” for AI systems: one common connector in place of a custom integration for each pairing.

Key Components

Architecture

MCP follows a client-server architecture:

- MCP Hosts: Applications where users or AI systems interact (e.g., Claude Desktop, IDE plugins)

- MCP Clients: Components within host applications that handle communication with servers

- MCP Servers: Lightweight programs exposing specific capabilities (file access, database connections, API access) through the standardized MCP interface

- Data Sources: Local or remote information repositories that MCP servers can securely access
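
To make the four roles concrete, here is a minimal in-memory sketch. It is not the MCP SDK. It assumes JSON-RPC 2.0 style messages and the `resources/list` method name. The `ToyServer` and `ToyClient` classes are hypothetical, and a direct method call stands in for a real transport.

```typescript
// Toy illustration of the host -> client -> server flow. This is not the MCP
// SDK: ToyServer and ToyClient are hypothetical, and a direct method call
// stands in for a real transport.

interface JsonRpcRequest {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params: Record<string, unknown>;
}

interface JsonRpcResponse {
  jsonrpc: "2.0";
  id: number;
  result?: unknown;
  error?: { code: number; message: string };
}

type Handler = (params: Record<string, unknown>) => unknown;

// Server: exposes capabilities by method name.
class ToyServer {
  // Null-prototype object so inherited keys such as "toString" never match.
  private handlers: Record<string, Handler> = Object.create(null);

  register(method: string, handler: Handler): void {
    this.handlers[method] = handler;
  }

  handle(request: JsonRpcRequest): JsonRpcResponse {
    const handler = this.handlers[request.method];
    if (!handler) {
      // -32601 is the JSON-RPC 2.0 "Method not found" error code.
      return { jsonrpc: "2.0", id: request.id, error: { code: -32601, message: "Method not found" } };
    }
    return { jsonrpc: "2.0", id: request.id, result: handler(request.params) };
  }
}

// Client: lives inside the host and talks to one server.
class ToyClient {
  private nextId = 1;
  private server: ToyServer;

  constructor(server: ToyServer) {
    this.server = server;
  }

  request(method: string, params: Record<string, unknown> = {}): JsonRpcResponse {
    return this.server.handle({ jsonrpc: "2.0", id: this.nextId++, method, params });
  }
}

// Data source: a plain in-memory list in this sketch.
const toyServer = new ToyServer();
toyServer.register("resources/list", () => ({
  resources: [{ uri: "file:///logs/app.log", name: "Application Logs" }],
}));

// Host: owns the client and would hand results to the LLM.
const toyClient = new ToyClient(toyServer);
const listResponse = toyClient.request("resources/list");
const missingResponse = toyClient.request("unknown/method");
```

In this sketch the host never touches the data source directly. It only sees what the server chooses to return through the client, which is the separation the architecture is meant to enforce.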

Core Primitives

MCP servers expose three core primitives. Each is controlled by a different party: the application (resources), the user (prompts), or the model (tools).

1. Resources

Resources represent data and content that MCP servers make available to LLMs.

- Characteristics: Application-controlled, identified by unique URIs

- Data Types: Text (UTF-8 encoded) or Binary (Base64 encoded)

- Discovery Methods: Direct listing or templates for dynamic resource requests

- Operations: Reading content, receiving updates

Example use case: An MCP server exposing a log file as a resource with URI file:///logs/app.log
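
The two content types and template-based discovery can be sketched as follows. This is an illustration under stated assumptions, not SDK code. `matchTemplate`, `readResource`, the `file:///logs/{service}.log` template, and the sample log line are all hypothetical, and the matcher handles only simple `{name}` placeholders rather than full URI template syntax.

```typescript
// A resource's contents are either UTF-8 text or Base64-encoded binary.
type ResourceContents =
  | { uri: string; mimeType?: string; text: string }
  | { uri: string; mimeType?: string; blob: string };

// Hypothetical helper: match a URI against a template with "{name}"
// placeholders. Each placeholder matches one path segment (no "/").
function matchTemplate(template: string, uri: string): Record<string, string> | null {
  const names: string[] = [];
  const pattern = template
    .split(/(\{[^}]+\})/)
    .map((part) => {
      if (part.charAt(0) === "{" && part.charAt(part.length - 1) === "}") {
        names.push(part.slice(1, -1));
        return "([^/]+)";
      }
      // Escape regex metacharacters in the literal parts of the template.
      return part.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
    })
    .join("");
  const match = new RegExp(`^${pattern}$`).exec(uri);
  if (!match) return null;
  const vars: Record<string, string> = {};
  names.forEach((name, i) => {
    vars[name] = match[i + 1] ?? "";
  });
  return vars;
}

// Hypothetical in-memory data source keyed by service name (made-up sample).
const logsByService: Record<string, string> = {
  app: "2024-01-01 INFO started",
};

// Resolve a URI to text contents, or throw if nothing matches.
function readResource(uri: string): ResourceContents {
  const vars = matchTemplate("file:///logs/{service}.log", uri);
  const service = vars ? vars["service"] : undefined;
  const text = service !== undefined ? logsByService[service] : undefined;
  if (text === undefined) throw new Error(`Resource not found: ${uri}`);
  return { uri, mimeType: "text/plain", text };
}
```

A binary resource would use the `blob` variant instead, carrying the Base64 string and an appropriate `mimeType`.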

2. Prompts

Prompts are predefined templates or workflows that servers offer to guide LLM interactions.

- Characteristics: User-triggered, often appearing as slash commands

- Structure: Unique name, description, optional arguments

- Capabilities: Accept customization arguments, incorporate resource context, define multi-step interactions

- Operations: Discovery through listing, execution by request

Example use case: A git commit message generator prompt that accepts code changes as input
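
A prompt of this kind can be sketched as a definition plus a function that fills in the template. The shapes below mirror the structure described above (name, description, optional arguments). The prompt name `git-commit`, the `getPrompt` helper, and the template wording are hypothetical.

```typescript
interface PromptArgument {
  name: string;
  description?: string;
  required?: boolean;
}

interface PromptDefinition {
  name: string;
  description?: string;
  arguments?: PromptArgument[];
}

interface PromptMessage {
  role: "user" | "assistant";
  content: { type: "text"; text: string };
}

// What a server would advertise when prompts are listed.
const commitPrompt: PromptDefinition = {
  name: "git-commit",
  description: "Generate a Git commit message",
  arguments: [
    { name: "changes", description: "Git diff or description of changes", required: true },
  ],
};

// What a server would return when the user triggers the prompt.
function getPrompt(name: string, args: Record<string, string>): { messages: PromptMessage[] } {
  if (name !== commitPrompt.name) throw new Error(`Prompt not found: ${name}`);
  for (const arg of commitPrompt.arguments ?? []) {
    if (arg.required && !(arg.name in args)) {
      throw new Error(`Missing required argument: ${arg.name}`);
    }
  }
  return {
    messages: [
      {
        role: "user",
        content: {
          type: "text",
          text: `Generate a concise but descriptive commit message for these changes:\n\n${args["changes"]}`,
        },
      },
    ],
  };
}
```

A host could surface this as a slash command, collect the `changes` argument from the user, and send the returned messages to the model.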

3. Tools

Tools expose executable functions that LLMs can invoke (usually with user approval) to perform actions.

- Characteristics: Model-controlled, requiring well-defined input schemas

- Annotations: Include hints about behavior (read-only, destructive, idempotent, open-world)

- Security Features: Input validation, access control, clear user warnings

- Operations: Discovery through listing, execution through calls with parameters

Example use case: A calculator tool that performs mathematical operations on model-provided inputs
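
One way a host might use annotations to decide when to ask for user approval is sketched below. The `approvalLevel` function and its three levels are a hypothetical host policy, not part of the protocol. Missing hints are treated conservatively here (not read-only, potentially destructive). Hints are advisory, so a host should rely on them only for servers it trusts.

```typescript
// Behavior hints a tool may carry, as listed above.
interface ToolAnnotations {
  title?: string;
  readOnlyHint?: boolean;
  destructiveHint?: boolean;
  idempotentHint?: boolean;
  openWorldHint?: boolean;
}

type ApprovalLevel = "auto" | "confirm" | "confirm-with-warning";

// Hypothetical host policy: map hints to how much user approval to require.
function approvalLevel(annotations: ToolAnnotations = {}): ApprovalLevel {
  // Conservative defaults when a hint is absent.
  const readOnly = annotations.readOnlyHint ?? false;
  const destructive = annotations.destructiveHint ?? true;

  if (readOnly) return "auto"; // no side effects claimed
  return destructive ? "confirm-with-warning" : "confirm";
}

const calculatorLevel = approvalLevel({ readOnlyHint: true, openWorldHint: false });
const unannotatedLevel = approvalLevel();
const additiveLevel = approvalLevel({ destructiveHint: false });
```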

Importance and Benefits

For Developers

- Standardized Integration: Connect AI applications to diverse data sources without custom code for each

- Security Best Practices: Built-in guidelines for safely exposing sensitive information

- Simplified Architecture: Clear separation between AI models and their context sources

For Users and Organizations

- Flexibility: Easier to switch between different LLM providers or host applications

- Interoperability: Reduced vendor lock-in through standardized interfaces

- Enhanced Capabilities: AI systems gain access to more diverse information and action capabilities

Implementation Examples

File Resource Server

    // Import paths as published by the official TypeScript SDK
    import { Server } from "@modelcontextprotocol/sdk/server/index.js";
    import {
      ListResourcesRequestSchema,
      ReadResourceRequestSchema,
    } from "@modelcontextprotocol/sdk/types.js";

    // Server exposing a single log file as a resource
    const server = new Server({ /* config */ }, { capabilities: { resources: {} } });

    // List available resources
    server.setRequestHandler(ListResourcesRequestSchema, async () => {
      return {
        resources: [
          {
            uri: "file:///logs/app.log",
            name: "Application Logs",
            mimeType: "text/plain"
          }
        ]
      };
    });

    // Provide resource content
    server.setRequestHandler(ReadResourceRequestSchema, async (request) => {
      if (request.params.uri === "file:///logs/app.log") {
        // readLogFile() is a placeholder for application code that returns the log text
        const logContents = await readLogFile();
        return {
          contents: [{
            uri: request.params.uri,
            mimeType: "text/plain",
            text: logContents
          }]
        };
      }
      throw new Error("Resource not found");
    });

Calculator Tool Server

    // Import paths as published by the official TypeScript SDK
    import { Server } from "@modelcontextprotocol/sdk/server/index.js";
    import {
      CallToolRequestSchema,
      ListToolsRequestSchema,
    } from "@modelcontextprotocol/sdk/types.js";

    // Server exposing a single calculator tool
    const server = new Server({ /* config */ }, { capabilities: { tools: {} } });

    // List available tools
    server.setRequestHandler(ListToolsRequestSchema, async () => {
      return {
        tools: [{
          name: "calculate_sum",
          description: "Add two numbers together",
          inputSchema: {
            type: "object",
            properties: {
              a: { type: "number", description: "First number" },
              b: { type: "number", description: "Second number" }
            },
            required: ["a", "b"]
          },
          annotations: {
            title: "Calculate Sum",
            readOnlyHint: true,
            openWorldHint: false
          }
        }]
      };
    });

    // Handle tool execution
    server.setRequestHandler(CallToolRequestSchema, async (request) => {
      if (request.params.name === "calculate_sum") {
        try {
          // arguments may be omitted by the caller, so fall back to an empty object
          const { a, b } = request.params.arguments ?? {};
          if (typeof a !== "number" || typeof b !== "number") {
            throw new Error("Invalid input: 'a' and 'b' must be numbers.");
          }
          const sum = a + b;
          return {
            content: [{ type: "text", text: String(sum) }]
          };
        } catch (error) {
          // Report tool failures in the result so the model can see and react to them
          const message = error instanceof Error ? error.message : String(error);
          return {
            isError: true,
            content: [{ type: "text", text: `Error calculating sum: ${message}` }]
          };
        }
      }
      throw new Error("Tool not found");
    });
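
The hand-written `typeof` checks in the handler could instead be derived from the tool's declared input schema. The sketch below is a deliberately small, hypothetical validator (`validateArguments` and `SimpleSchema` are not SDK names). It covers only flat objects with primitive property types. A production server would more likely use a full JSON Schema validator.

```typescript
// A restricted subset of JSON Schema: flat object, primitive properties only.
interface SimpleSchema {
  type: "object";
  properties: Record<string, { type: "number" | "string" | "boolean"; description?: string }>;
  required?: string[];
}

// Hypothetical helper: returns a list of problems; an empty list means valid.
function validateArguments(schema: SimpleSchema, args: Record<string, unknown>): string[] {
  const errors: string[] = [];

  // Every required key must be present.
  for (const key of schema.required ?? []) {
    if (!(key in args)) errors.push(`Missing required argument '${key}'`);
  }

  // Every supplied key must be declared and have the declared primitive type.
  for (const key of Object.keys(args)) {
    const spec = schema.properties[key];
    if (!spec) {
      errors.push(`Unknown argument '${key}'`);
      continue;
    }
    if (typeof args[key] !== spec.type) {
      errors.push(`Argument '${key}' must be of type ${spec.type}`);
    }
  }
  return errors;
}

// Same schema as the calculate_sum tool above.
const sumSchema: SimpleSchema = {
  type: "object",
  properties: {
    a: { type: "number", description: "First number" },
    b: { type: "number", description: "Second number" },
  },
  required: ["a", "b"],
};

const okErrors = validateArguments(sumSchema, { a: 1, b: 2 });
const badErrors = validateArguments(sumSchema, { a: "1" });
```

Keeping validation tied to the schema means the description the model sees and the checks the server enforces cannot drift apart.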

Related Concepts

- LLM Function Calling: MCP provides a standardized way for LLMs to discover and invoke functions

- AI Agents: MCP offers a structured way for agent-based AI systems to access tools and information

- AI Plugins: Similar to browser extensions, MCP servers can be thought of as “plugins” that extend AI capabilities
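
The relationship to function calling can be illustrated by adapting an MCP tool definition into a function declaration of the kind many chat APIs accept. The target shape here (a `type: "function"` wrapper around `name`, `description`, and JSON Schema `parameters`) is an assumption about one common format. Field names vary by provider, and `toFunctionDeclaration` is a hypothetical helper.

```typescript
// Tool definition as a server would list it.
interface McpTool {
  name: string;
  description?: string;
  inputSchema: Record<string, unknown>;
}

// Assumed target shape; check the provider's documentation before relying on it.
interface FunctionDeclaration {
  type: "function";
  function: {
    name: string;
    description: string;
    parameters: Record<string, unknown>;
  };
}

// Hypothetical adapter: the tool's JSON Schema is passed through unchanged.
function toFunctionDeclaration(tool: McpTool): FunctionDeclaration {
  return {
    type: "function",
    function: {
      name: tool.name,
      description: tool.description ?? "",
      parameters: tool.inputSchema,
    },
  };
}

const sumDeclaration = toFunctionDeclaration({
  name: "calculate_sum",
  description: "Add two numbers together",
  inputSchema: {
    type: "object",
    properties: { a: { type: "number" }, b: { type: "number" } },
    required: ["a", "b"],
  },
});
```

Because the tool already carries a name, a description, and an input schema, the adapter is a straight field mapping. That is the sense in which MCP standardizes what function calling needs.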

Future Directions

- Enterprise AI Integration: Connecting corporate knowledge bases, tools, and workflows

- Multi-modal AI: Standardizing access to diverse types of data beyond text

- Collaborative AI Systems: Enabling AI assistants to work together through shared protocols

Frequently Asked Questions

- What is the Model Context Protocol (MCP)?

MCP is an open standard interface that allows LLMs to securely and consistently access external data sources, tools, and capabilities, creating a standardized communication layer between AI applications and context providers.

- What are the key components of MCP?

MCP consists of hosts, clients, servers, and data sources. It uses core primitives—resources, prompts, and tools—to enable flexible and secure interactions between LLMs and external systems.

- What benefits does MCP provide to developers and organizations?

MCP simplifies AI integration, enhances security, reduces vendor lock-in, and enables seamless access to diverse information and tools for both developers and organizations.

- How is MCP implemented in real-world applications?

MCP can be implemented through servers that expose resources or tools (e.g., log file access, calculator tools) using a standardized interface, simplifying connections with AI models.

- How does MCP relate to LLM function calling and AI plugins?

MCP standardizes the process of LLMs invoking external functions or tools, similar to how plugins extend the capabilities of browsers or software.
