LLM Context MCP Server

Connect AI agents to code and text projects with the LLM Context MCP Server, which supports development workflows with secure, context-rich, automated assistance.

What does “LLM Context” MCP Server do?

LLM Context MCP Server connects AI assistants to external code and text projects through the Model Context Protocol (MCP). It uses .gitignore patterns to select files, so developers can inject relevant content directly into LLM chat interfaces or use a clipboard workflow. This supports tasks such as code review, documentation generation, and project exploration with context-aware AI assistance. LLM Context works with both code repositories and collections of text documents, which makes it a versatile bridge between project data and AI-powered workflows.
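To make the idea of .gitignore-based file selection concrete, here is a minimal Python sketch. It is not the server's actual implementation: the function names `is_ignored` and `select_files` are hypothetical, and the matching is a simplified approximation built on `fnmatch` (no negation with `!`, no anchored patterns, and `*` also matches `/`, unlike real .gitignore rules).

```python
import fnmatch
from pathlib import PurePosixPath


def is_ignored(path: str, patterns: list[str]) -> bool:
    """Return True if `path` matches any simplified ignore pattern (hypothetical helper)."""
    parts = PurePosixPath(path).parts
    for pattern in patterns:
        pattern = pattern.strip()
        if not pattern or pattern.startswith("#"):
            continue  # skip blank lines and comments
        if pattern.endswith("/"):
            # Directory pattern: ignore the file if any parent directory matches.
            if any(fnmatch.fnmatch(part, pattern[:-1]) for part in parts[:-1]):
                return True
        elif fnmatch.fnmatch(parts[-1], pattern) or fnmatch.fnmatch(path, pattern):
            # File pattern: match against the file name or the whole path.
            return True
    return False


def select_files(paths: list[str], patterns: list[str]) -> list[str]:
    """Keep only the paths that no ignore pattern excludes."""
    return [p for p in paths if not is_ignored(p, patterns)]


paths = [
    "src/app.py",
    "src/app.pyc",
    "node_modules/lib/index.js",
    "README.md",
    "build/out.txt",
]
patterns = ["*.pyc", "node_modules/", "build/"]
print(select_files(paths, patterns))  # ['src/app.py', 'README.md']
```

A production tool would normally rely on a dedicated gitignore-matching library instead of `fnmatch`, since the full pattern syntax has more rules than this sketch covers.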

List of Prompts

No information found in the repository regarding defined prompt templates.

List of Resources

No explicit resources are mentioned in the provided files or documentation.

List of Tools

No server.py or equivalent file listing tools is present in the visible repository structure. No information about exposed tools could be found.

Use Cases of this MCP Server

- Code Review Automation: Injects relevant code segments into LLM interfaces to assist in automated or assisted code reviews.

- Documentation Generation: Enables AI to access and summarize documentation directly from project files.

- Project Exploration: Assists developers and AI agents in quickly understanding large codebases or text projects by surfacing key files and outlines.

- Clipboard Workflow: Allows users to copy content to and from the clipboard for quick sharing with LLMs, improving productivity in chat-based workflows.
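The clipboard and context-injection use cases above both come down to turning a set of selected files into one block of text an LLM can read. The sketch below illustrates that step under stated assumptions: `build_context` is a hypothetical helper, and the `## path` header format is an arbitrary choice, not the format the server produces.

```python
def build_context(files: dict[str, str]) -> str:
    """Join file contents into one text block, each preceded by its path (hypothetical format)."""
    sections = []
    for path in sorted(files):
        # A header line with the path lets the model tell the files apart.
        sections.append(f"## {path}\n{files[path].rstrip()}\n")
    return "\n".join(sections)


files = {
    "src/app.py": "print('hello')\n",
    "README.md": "# Demo\n",
}
context = build_context(files)
print(context)
```

The resulting string could then be pasted into a chat interface or handed to whatever clipboard utility is available on the system.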

How to set it up

Windsurf

- Ensure you have Node.js and Windsurf installed.

- Locate the Windsurf configuration file (e.g., windsurf.config.json).

- Add the LLM Context MCP Server using the following JSON snippet:

    {
      "mcpServers": {
        "llm-context": {
          "command": "llm-context-mcp",
          "args": []
        }
      }
    }

- Save the configuration and restart Windsurf.

- Verify the setup by checking if the MCP server appears in Windsurf.

Claude

- Install Node.js and ensure Claude supports MCP integration.

- Edit Claude’s configuration file to include the MCP server:

    {
      "mcpServers": {
        "llm-context": {
          "command": "llm-context-mcp",
          "args": []
        }
      }
    }

- Save the file and restart Claude.

- Confirm the server is available in Claude’s MCP settings.

Cursor

- Install any prerequisites for the Cursor editor.

- Open Cursor’s MCP configuration file.

- Add the LLM Context MCP Server:

    {
      "mcpServers": {
        "llm-context": {
          "command": "llm-context-mcp",
          "args": []
        }
      }
    }

- Save changes and restart Cursor.

- Verify the MCP server is operational.

Cline

- Install Node.js and Cline.

- Edit the Cline configuration to register the MCP server:

    {
      "mcpServers": {
        "llm-context": {
          "command": "llm-context-mcp",
          "args": []
        }
      }
    }

- Save and restart Cline.

- Check that the MCP server is now accessible.
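Because all four clients use the same `mcpServers` entry, the edit can be scripted instead of done by hand. The following sketch assumes the client stores its settings in a single JSON file; `register_server` and the file name `mcp_config.json` are hypothetical, and the demo writes to a temporary directory rather than to any real client configuration.

```python
import json
import tempfile
from pathlib import Path


def register_server(config_path: Path, name: str = "llm-context") -> dict:
    """Add the server entry under "mcpServers", preserving other settings."""
    config = {}
    if config_path.exists():
        config = json.loads(config_path.read_text(encoding="utf-8"))
    # Same entry as in the snippets above; existing servers are left untouched.
    config.setdefault("mcpServers", {})[name] = {
        "command": "llm-context-mcp",
        "args": [],
    }
    config_path.write_text(json.dumps(config, indent=2) + "\n", encoding="utf-8")
    return config


# Demo against a temporary file rather than a real client configuration.
with tempfile.TemporaryDirectory() as tmp:
    demo_path = Path(tmp) / "mcp_config.json"
    demo_path.write_text(
        '{"mcpServers": {"other": {"command": "other-server", "args": []}}}',
        encoding="utf-8",
    )
    result = register_server(demo_path)
    print(sorted(result["mcpServers"]))  # ['llm-context', 'other']
```

Point `config_path` at the actual configuration file of your client, and back the file up first, since the script rewrites it.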

Securing API Keys

Set environment variables to protect API keys and secrets. Example configuration:

    {
      "mcpServers": {
        "llm-context": {
          "command": "llm-context-mcp",
          "args": [],
          "env": {
            "API_KEY": "${LLM_CONTEXT_API_KEY}"
          },
          "inputs": {
            "apiKey": "${LLM_CONTEXT_API_KEY}"
          }
        }
      }
    }
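The `${LLM_CONTEXT_API_KEY}` placeholder only helps if something replaces it with the real value at launch time. Whether a given client performs that substitution itself is not stated here, so the sketch below shows one way it could be done and checked: `resolve_placeholders` is a hypothetical helper that walks a configuration and fails loudly when a referenced variable is missing.

```python
import os
import re

# Matches ${NAME} where NAME is a typical environment variable name.
PLACEHOLDER = re.compile(r"\$\{([A-Za-z_][A-Za-z0-9_]*)\}")


def resolve_placeholders(value, env=os.environ):
    """Recursively replace ${NAME} in strings with values from `env`."""
    if isinstance(value, dict):
        return {k: resolve_placeholders(v, env) for k, v in value.items()}
    if isinstance(value, list):
        return [resolve_placeholders(v, env) for v in value]
    if isinstance(value, str):
        def substitute(match):
            name = match.group(1)
            if name not in env:
                # Failing early avoids starting a server with an empty key.
                raise KeyError(f"environment variable {name} is not set")
            return env[name]
        return PLACEHOLDER.sub(substitute, value)
    return value


server = {
    "command": "llm-context-mcp",
    "args": [],
    "env": {"API_KEY": "${LLM_CONTEXT_API_KEY}"},
}
# A stand-in environment is passed explicitly so the demo needs no real secret.
resolved = resolve_placeholders(server, env={"LLM_CONTEXT_API_KEY": "example-value"})
print(resolved["env"]["API_KEY"])  # example-value
```

Keeping the secret in the environment means the configuration file can be committed or shared without exposing the key.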

How to use this MCP inside flows

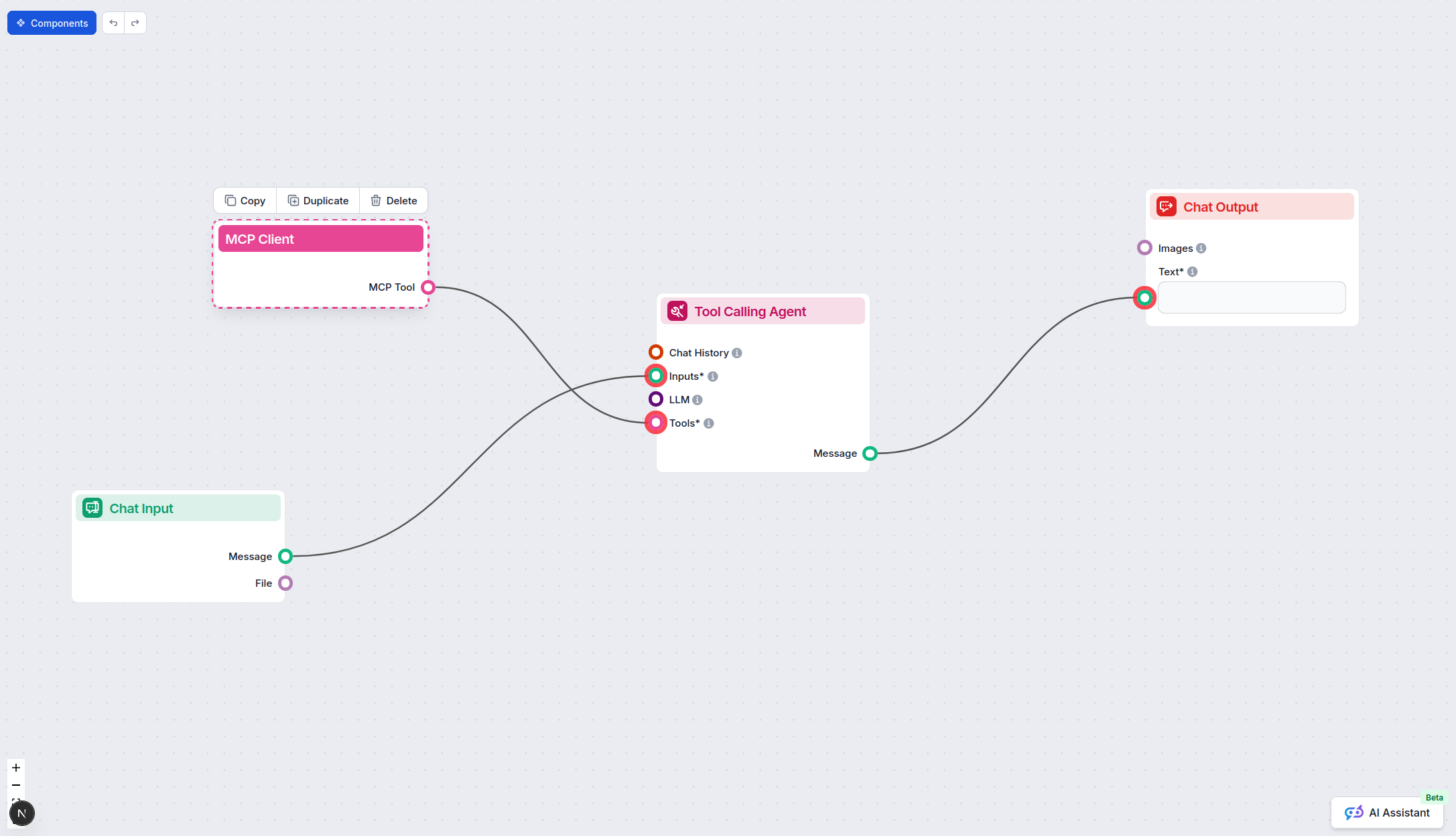

Using MCP in FlowHunt

To integrate MCP servers into your FlowHunt workflow, start by adding the MCP component to your flow and connecting it to your AI agent.

Click on the MCP component to open the configuration panel. In the system MCP configuration section, insert your MCP server details using this JSON format:

    {
      "llm-context": {
        "transport": "streamable_http",
        "url": "https://yourmcpserver.example/pathtothemcp/url"
      }
    }

Once configured, the AI agent can use this MCP as a tool with access to all its functions and capabilities. Remember to change "llm-context" to the actual name of your MCP server and replace the URL with your own MCP server URL.
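Before pasting the JSON into the configuration panel, it can be worth checking that it has the shape shown above. The sketch below is an illustration only: `validate_flowhunt_config` is a hypothetical helper, and it checks nothing beyond the two fields from the example (it does not know which other transports or fields FlowHunt may accept).

```python
import json
from urllib.parse import urlparse


def validate_flowhunt_config(raw: str) -> list[str]:
    """Return a list of problems found in the MCP configuration text."""
    problems = []
    config = json.loads(raw)
    for name, server in config.items():
        # The example above uses the "streamable_http" transport.
        if server.get("transport") != "streamable_http":
            problems.append(f"{name}: unexpected transport {server.get('transport')!r}")
        parsed = urlparse(server.get("url", ""))
        if parsed.scheme not in ("http", "https") or not parsed.netloc:
            problems.append(f"{name}: url must be an absolute http(s) URL")
    return problems


example = """
{
  "llm-context": {
    "transport": "streamable_http",
    "url": "https://yourmcpserver.example/pathtothemcp/url"
  }
}
"""
print(validate_flowhunt_config(example))  # []
```

An empty list means the snippet matches the expected shape; it does not confirm that the server behind the URL is reachable.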

Overview

| Section | Availability | Details/Notes |
|---|---|---|
| Overview | ✅ | |
| List of Prompts | ⛔ | No information found |
| List of Resources | ⛔ | No information found |
| List of Tools | ⛔ | No information found |
| Securing API Keys | ✅ | Environment variable example provided |
| Sampling Support (less important in evaluation) | ⛔ | No information found |

Based on the two tables, this MCP server has a strong overview and security best practices but lacks clear documentation for prompts, resources, and tools. As such, it is most useful for basic context-sharing workflows and requires further documentation to fully leverage MCP’s advanced features.

MCP Score

| Has a LICENSE | ✅ (Apache-2.0) |
|---|---|
| Has at least one tool | ⛔ |
| Number of Forks | 18 |
| Number of Stars | 231 |

Frequently asked questions

- What is the LLM Context MCP Server?

The LLM Context MCP Server connects AI agents to external code and text projects, providing intelligent context selection via .gitignore patterns and enabling advanced workflows like code review, documentation generation, and project exploration directly within LLM chat interfaces.

- What are the primary use cases for this MCP Server?

Key use cases include code review automation, documentation generation, rapid project exploration, and clipboard-based content sharing with LLMs for productivity boosts in chat-based workflows.

- How do I securely configure API keys for the LLM Context MCP Server?

Set environment variables with your API keys (e.g., LLM_CONTEXT_API_KEY) and reference them in your MCP server configuration to keep your keys out of source code and configuration files.

- Does the server come with prompt templates or built-in tools?

No, the current version lacks defined prompts and explicit tools, making it ideal for basic context-sharing workflows but requiring further customization for more advanced features.

- What license does the LLM Context MCP Server use?

This server is open-source under the Apache-2.0 license.

- How do I use the LLM Context MCP Server in FlowHunt?

Add the MCP component to your FlowHunt flow, enter the MCP server details in the configuration panel using the provided JSON format, and connect it to your AI agent for enhanced, context-aware automation.

Boost Your AI Workflow with LLM Context MCP

Integrate the LLM Context MCP Server into FlowHunt for smarter, context-aware automation in your coding and documentation processes.