mcp-proxy MCP Server

Connect AI assistants to tools and systems across different MCP transport protocols using the mcp-proxy MCP Server for FlowHunt.

What does the “mcp-proxy” MCP Server do?

The mcp-proxy MCP Server bridges the Streamable HTTP and stdio MCP transports so that AI assistants can communicate with Model Context Protocol (MCP) servers or clients that use the other transport. Its core function is to translate between these two widely used transports, allowing tools, resources, and workflows built for one to be accessed via the other without modification. In practice, an AI assistant can reach external data sources, APIs, or services regardless of which transport they expose, enabling tasks such as database queries, file management, or API interactions across diverse systems.
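The translation step described above can be illustrated with a minimal sketch. It is not mcp-proxy's actual implementation: it assumes the stdio side carries newline-delimited JSON-RPC messages, and it replaces the HTTP side with a hypothetical `post` callable so the example runs without a network. A real Streamable HTTP client would also need to handle sessions and streamed responses.

```python
import io
import json
from typing import Callable, Optional, TextIO


def bridge_stdio_to_http(
    stdin: TextIO,
    stdout: TextIO,
    post: Callable[[dict], Optional[dict]],
) -> int:
    """Forward newline-delimited JSON-RPC messages read from stdin.

    `post` is a hypothetical stand-in for an HTTP request to the remote
    MCP endpoint. It returns a reply dict, or None when there is no reply
    (for example, for a notification).
    """
    forwarded = 0
    for line in stdin:
        line = line.strip()
        if not line:
            continue  # skip blank lines between messages
        message = json.loads(line)
        reply = post(message)
        forwarded += 1
        if reply is not None:
            # Write the reply back on the stdio side, one message per line.
            stdout.write(json.dumps(reply) + "\n")
    return forwarded


def fake_post(message: dict) -> Optional[dict]:
    """Pretend remote server: answers requests, ignores notifications."""
    if "id" not in message:
        return None
    return {"jsonrpc": "2.0", "id": message["id"], "result": {}}


demo_in = io.StringIO(
    '{"jsonrpc": "2.0", "id": 1, "method": "ping"}\n'
    "\n"
    '{"jsonrpc": "2.0", "method": "notifications/initialized"}\n'
)
demo_out = io.StringIO()
demo_count = bridge_stdio_to_http(demo_in, demo_out, fake_post)
```

Swapping `fake_post` for a function that performs a real HTTP POST is where an actual bridge would do its work; the loop around it stays the same.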

List of Prompts

No prompt templates are mentioned in the repository.

List of Resources

No explicit MCP resources are described in the repository documentation or code.

List of Tools

No tools are defined in the repository’s documentation or visible code (e.g., no explicit functions, tools, or server.py with tool definitions present).

Use Cases of this MCP Server

- Protocol Bridging: Allows MCP clients using stdio transport to communicate with servers using Streamable HTTP, and vice versa, expanding interoperability.

- Legacy System Integration: Facilitates integration of legacy MCP tools or servers with modern HTTP-based AI platforms, reducing redevelopment effort.

- AI Workflow Enhancement: Enables AI assistants to access a broader range of tools and services by bridging protocol gaps, enriching possible actions and data sources.

- Cross-Platform Development: Makes it easier to develop and test MCP-based tools across environments that prefer different transports, improving developer flexibility.

How to set it up

Windsurf

- Ensure Python is installed on your system.

- Clone the mcp-proxy repository or install via PyPI if available.

- Edit your Windsurf configuration file to add the mcp-proxy MCP server.

- Use the following JSON snippet in your configuration:

{
  "mcpServers": {
    "mcp-proxy": {
      "command": "mcp-proxy",
      "args": []
    }
  }
}

- Restart Windsurf and verify that the mcp-proxy server is running.

Claude

- Ensure Python is installed.

- Clone or install the mcp-proxy server.

- Open Claude’s configuration/settings for MCP servers.

- Add the following configuration:

{
  "mcpServers": {
    "mcp-proxy": {
      "command": "mcp-proxy",
      "args": []
    }
  }
}

- Save and restart Claude, then verify connectivity.

Cursor

- Install Python and the mcp-proxy package.

- Open Cursor’s extension or MCP server settings.

- Add this configuration:

{
  "mcpServers": {
    "mcp-proxy": {
      "command": "mcp-proxy",
      "args": []
    }
  }
}

- Save changes and restart Cursor.

Cline

- Ensure Python is installed.

- Install mcp-proxy via PyPI or clone the repo.

- Edit the Cline configuration file:

{
  "mcpServers": {
    "mcp-proxy": {
      "command": "mcp-proxy",
      "args": []
    }
  }
}

- Save and restart Cline.
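Because all four clients use the same `mcpServers` entry, the edit can be scripted. The sketch below is one possible approach, not an official installer: it merges the mcp-proxy entry into an already loaded configuration without disturbing other servers. The `other-server` entry is a hypothetical example, and the location of each client's configuration file is not given in the source, so file reading and writing are left out.

```python
import copy
import json
from typing import Any, Dict, List


def add_mcp_server(
    config: Dict[str, Any],
    name: str,
    command: str,
    args: List[str],
) -> Dict[str, Any]:
    """Return a copy of `config` with one server added under "mcpServers"."""
    updated = copy.deepcopy(config)  # leave the caller's dict untouched
    servers = updated.setdefault("mcpServers", {})
    servers[name] = {"command": command, "args": list(args)}
    return updated


# Hypothetical existing configuration that already lists another server.
existing = {"mcpServers": {"other-server": {"command": "other", "args": []}}}

# Same entry as in the snippets above; the empty args list mirrors the source.
merged = add_mcp_server(existing, "mcp-proxy", "mcp-proxy", [])
print(json.dumps(merged, indent=2))
```

Returning a copy rather than mutating the input makes it easy to compare the old and new configuration before writing anything to disk.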

Securing API Keys

Keep secrets such as API keys out of the configuration file itself by supplying them as environment variables through the env field:

{
  "mcpServers": {
    "mcp-proxy": {
      "command": "mcp-proxy",
      "args": [],
      "env": {
        "API_KEY": "${API_KEY}"
      },
      "inputs": {
        "api_key": "${API_KEY}"
      }
    }
  }
}
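Whether a given client expands `${API_KEY}` placeholders on its own depends on that client, and the source does not say. As a rough illustration of the idea, the following sketch resolves such placeholders against a mapping of environment variables using Python's standard `string.Template`. The function name `resolve_env` and the sample value are hypothetical.

```python
from string import Template
from typing import Dict, Mapping


def resolve_env(
    env_block: Mapping[str, str],
    environ: Mapping[str, str],
) -> Dict[str, str]:
    """Replace ${NAME} placeholders in an "env" block with real values.

    Raises KeyError when a referenced variable is not set, so a missing
    secret fails loudly instead of being passed along as a literal string.
    """
    resolved = {}
    for key, value in env_block.items():
        resolved[key] = Template(value).substitute(environ)
    return resolved


# The "env" block from the configuration above.
env_block = {"API_KEY": "${API_KEY}"}

# Stand-in for os.environ so the example does not depend on the real shell.
fake_environ = {"API_KEY": "s3cret-value"}

resolved_env = resolve_env(env_block, fake_environ)
```

In real use, `os.environ` would be passed in place of `fake_environ`, so the secret lives only in the process environment and never in the JSON file.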

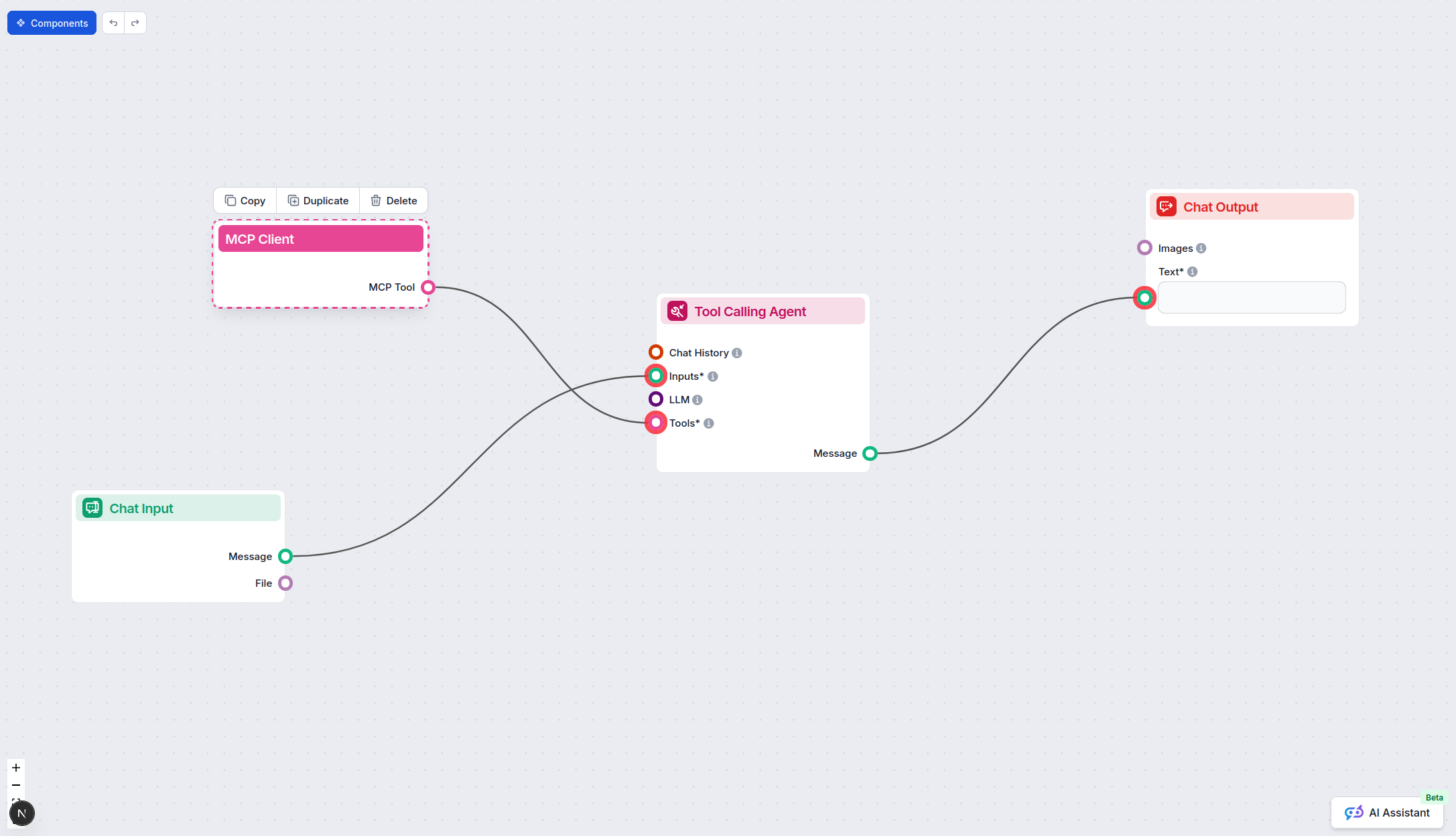

How to use this MCP inside flows

Using MCP in FlowHunt

To integrate MCP servers into your FlowHunt workflow, start by adding the MCP component to your flow and connecting it to your AI agent.

Click on the MCP component to open the configuration panel. In the system MCP configuration section, insert your MCP server details using this JSON format:

{
  "mcp-proxy": {
    "transport": "streamable_http",
    "url": "https://yourmcpserver.example/pathtothemcp/url"
  }
}

Once configured, the AI agent can use this MCP as a tool with access to all its functions and capabilities. Remember to change “mcp-proxy” to the actual name of your MCP server and replace the URL with your own MCP server URL.
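Before pasting the JSON into FlowHunt, a quick local check can catch the most common slip, which is leaving the placeholder URL in place. The sketch below is only an illustration and not a FlowHunt API: it assumes each entry needs the `transport` and `url` keys shown above, and the function name and the replacement host `mcp.internal.test` are hypothetical.

```python
from typing import Any, Dict, List
from urllib.parse import urlparse

# Host used in the sample snippet; it must be replaced with a real one.
PLACEHOLDER_HOST = "yourmcpserver.example"


def check_flowhunt_mcp_config(config: Dict[str, Any]) -> List[str]:
    """Return a list of human-readable problems found in the config."""
    problems = []
    for name, entry in config.items():
        for key in ("transport", "url"):
            if key not in entry:
                problems.append(f"{name}: missing '{key}'")
        url = entry.get("url", "")
        parsed = urlparse(url)
        if url and (parsed.scheme not in ("http", "https") or not parsed.netloc):
            problems.append(f"{name}: url is not a valid http(s) URL")
        if parsed.hostname == PLACEHOLDER_HOST:
            problems.append(f"{name}: url still uses the placeholder host")
    return problems


sample = {
    "mcp-proxy": {
        "transport": "streamable_http",
        "url": "https://yourmcpserver.example/pathtothemcp/url",
    }
}
sample_problems = check_flowhunt_mcp_config(sample)
```

An empty list means the entry has the expected shape; it does not confirm that the server at that URL is reachable.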

Overview

| Section | Availability | Details/Notes |
|---|---|---|
| Overview | ✅ | |
| List of Prompts | ⛔ | None found |
| List of Resources | ⛔ | None found |
| List of Tools | ⛔ | No explicit tools defined |
| Securing API Keys | ✅ | Via env in config |
| Sampling Support (less important in evaluation) | ⛔ | No mention |
| Roots Support | ⛔ | No mention |

Based on the above, mcp-proxy is highly specialized for protocol translation but does not provide tools, prompts, or resources out of the box. Its value lies in integration and connectivity, not in providing direct LLM utilities.

Our opinion

mcp-proxy is a critical utility for bridging MCP transport protocols, making it highly valuable in environments where protocol mismatches limit AI/LLM tool interoperability. However, it does not provide direct LLM enhancements such as resources, prompts, or tools. For its intended use case, it’s a robust, well-supported project. Rating: 6/10 for general MCP utility, 9/10 if you specifically need protocol bridging.

MCP Score

| Has a LICENSE | ✅ (MIT) |
|---|---|
| Has at least one tool | ⛔ |
| Number of Forks | 128 |
| Number of Stars | 1.1k |

Frequently asked questions

- What does the mcp-proxy MCP Server do?

The mcp-proxy MCP Server bridges Streamable HTTP and stdio MCP transports, enabling seamless communication between AI assistants and a variety of MCP servers or clients. This allows workflows and tools built for different protocols to work together without modification.

- What are some use cases for the mcp-proxy MCP Server?

mcp-proxy is ideal for protocol bridging between different MCP transports, integrating legacy systems with modern AI platforms, enhancing AI workflow connectivity, and supporting cross-platform development and testing.

- Does mcp-proxy provide tools or prompt resources?

No, mcp-proxy focuses solely on protocol translation and does not provide built-in tools, prompt templates, or resources. Its value is in enabling interoperability and integration.

- How do I secure API keys with mcp-proxy?

You can use environment variables within your MCP server configuration to secure API keys. For example, use an 'env' block and reference variables in your configuration JSON.

- How do I use mcp-proxy in FlowHunt?

Add the MCP component to your FlowHunt flow, then configure the mcp-proxy MCP server in the system MCP configuration using the appropriate JSON snippet. This enables your AI agent to access all capabilities made available by the bridged MCP protocols.

Try mcp-proxy with FlowHunt

Bridge your AI workflow gaps and enable seamless protocol interoperability with mcp-proxy. Integrate legacy systems and enhance your AI's reach instantly.